Hear Perry Mulligan & Sumit Sharma explain MVIS value proposition and product strategy for AI at the Edge at Northland Capital Markets AI Virtual Call Series:

Recording

Official Transcript

Mike Lattimore, Northland Capital: [Introductory comments]

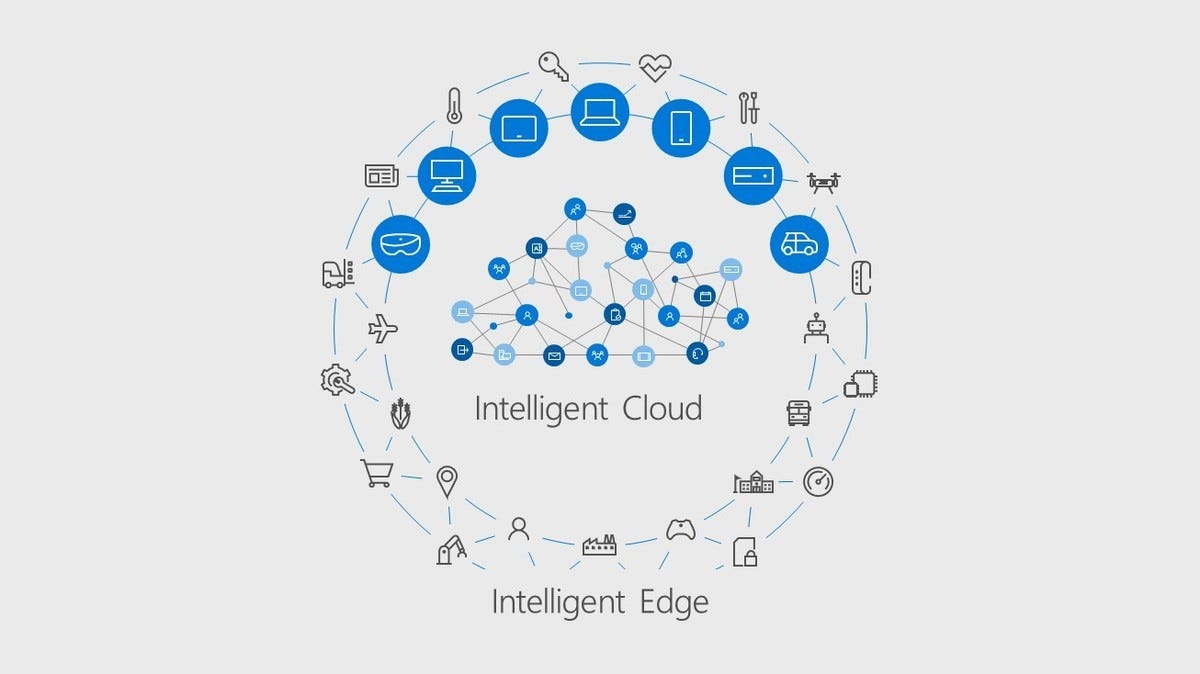

Perry Mulligan, CEO MicroVision: If you think of it today, for many consumers in the space, we recognize that AI represents this beautiful brain somewhere isolated in the cloud, and they have the ability to speak to it, and it answers some simple questions and performs some simple tasks.

We're absolutely convinced that we can enhance that experience by providing sight, sound, gesture recognition interactivity and 3D sensing to that brain to help put things in context. So, really making that brain much more aware of that environment from which you are posing those questions or interacting with it, and subsequently making it much easier for users to interact with this great tool, this great platform.

Mike Lattimore: That sounds really attractive actually. Makes a lot of sense. AI can mean a lot of different things. When you talk about AI, how do you define it and what technologies are in your product, versus something that's in the cloud?

Perry Mulligan: Let's treat that as a two-part question, I'll give you the layperson version of it first, and then I'll ask Sumit to explain with a little more granularity the subtleties of what's in our product. When we think of AI, we absolutely believe that AI is the product. We think of AI as a very ubiquitous high neural net compute engine that's available through the cloud, and we are providing at the peripheral, compute and sensory inputs to it.

So I hope that puts it in context, for us, we consider AI the product. And now we're here about enhancing the ability of that wonderful ecosystem to interact with people more readily and easily, make it easier for people to interact with that platform.

Relative to the question about what is unique, what level of machine intelligence, machine learning is in our product, I'll let Sumit give you a little bit of color on that.

Sumit Sharma, COO MicroVision: To add to what Perry said, if you think about AI, which right now in the market is Alexa, Google Assistant, Bixby, a wide variety of services, these are all cloud computing services.

Where is the Edge computing now? If you think about our module, think of it more like an Edge computer, an Edge computer that is an I/O point for the actual user. Before the cloud service can actually infer something, it needs more data, something specific about the situation, about the user. That cannot be inferred by their Google searches, or their purchasing habits alone. It's something specific, a service that's needed instantaneously.

The way we think about this, the way I kind of describe this internally as well is, AI is the product, it is the cloud computing platform. It can do lots. It's gonna have voice today. It's gonna have more services. But the voice is the interaction point that it requires. So, Amazon Voice Services for example, that is just the beginning. The brain by itself in the cloud is gonna expand with more services. More that it can infer. But they need more input. And the input of course for humans, is very simply, visual and touch. So data, structured any kind of way, at any kind of instant allows them to infer more things about that user, and actively provide services to them.

So, in our module, we don't use the word AI for the module, even though we have machine learning inside, because it's very specific to what the sensor is, to what the interaction is, we're adapting the user experience to get very specific user data, and allowing the AI to infer from that.

It's taking the load that would be required to take raw user data, supply to the cloud, churn through millions of data points, parse all that through and then provide a service, it's to have a subset of machine intelligence embedded inside our module that actively allows the AI to adapt the service.

Mike Lattimore: That's very great, that's very good. Just to make it even more clear, what would you say your machine learning technology which is in your sensors, what would that do, vs. what would occur in the cloud? What are some of the things that your sensors take off the cloud load?

Perry Mulligan: In the simplest of terms, when we think of what we can provide at the sensor, we have to first start off with the understanding that we're coming from the solution with a wonderful advantage. Our LIDAR capabilities have a point cloud density that just makes it very easy for us to do things like object recognition, predictive travel, to understand what motion looks like through the field that we see it happening, so we can apply machine learning from a predictive perspective.

So we can do relatively low power, simple analytics at the device, instead of, as Sumit points out, sending massive amounts of data to the AI network. And that's relevant whether we're dealing with AI in the cloud, or whether we're dealing with the fusion of sensors in the automotive environment. We're simply combining the characteristics of our device in a way that allows us to provide very near term messaging, it provides a latency advantage, that we think is a pretty interesting perspective.

Maybe I'll let Sumit expand on that, it's almost counter-intuitive. With more data, why is it easier to do this than it would be if you had less data?

Sumit Sharma: Think about the massive amount of data that we actually have inside of our silicon. We can infer quite a lot, with enough machine intelligence, just the right amount, we can infer things in milliseconds, or tens of milliseconds, where you can imagine just transporting the data to the cloud, that would be hundreds of milliseconds, or seconds.

The interaction point for IoT products, is not a capacitive touch LCD. Those form factors, users don't want in their homes. This allows them to interact with a point, and the services are adapting on the fly. In our Consumer LIDAR, it's inferring things about space, space management. But in all of them, the common theme is, in the Edge computer, which is our module, our Edge computing, we infer things that are very important to the AI to provide a more streamlined service, at a faster pace.

Of course, the raw data that's inside there, we can certainly transport it, if they require it. But in all cases, that is a backup. The primary thing is low latency, the users want this magical feel. Even though the AI is in the cloud, they want it as if it is present right there.
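Editor's illustration: the latency argument above can be sketched with simple arithmetic. The snippet below compares shipping a raw point-cloud frame to the cloud against shipping only a small inferred result. Every figure in it (points per frame, bytes per point, message size, uplink speed, round-trip time) is a hypothetical assumption chosen for illustration, not a MicroVision specification, and `transfer_time_ms` is a made-up helper name.

```python
def transfer_time_ms(payload_bytes: int, uplink_mbps: float, round_trip_ms: float) -> float:
    """Estimate time to ship a payload: serialization time plus one network round trip."""
    serialization_ms = payload_bytes * 8 / (uplink_mbps * 1_000_000) * 1000
    return serialization_ms + round_trip_ms


# All figures below are illustrative assumptions, not product specifications.
POINTS_PER_FRAME = 100_000   # assumed points in one raw frame
BYTES_PER_POINT = 12         # assumed three 32-bit floats (x, y, z)
EVENT_BYTES = 64             # assumed size of a compact inferred message
UPLINK_MBPS = 10.0           # assumed home uplink speed
ROUND_TRIP_MS = 50.0         # assumed network round trip

raw_ms = transfer_time_ms(POINTS_PER_FRAME * BYTES_PER_POINT, UPLINK_MBPS, ROUND_TRIP_MS)
event_ms = transfer_time_ms(EVENT_BYTES, UPLINK_MBPS, ROUND_TRIP_MS)

print(f"raw frame to cloud: {raw_ms:.1f} ms")
print(f"inferred event to cloud: {event_ms:.1f} ms")
```

Under these assumptions the raw frame takes on the order of a second to reach the cloud, while the inferred message is dominated by the round trip alone, which is the gap between "hundreds of milliseconds, or seconds" and "tens of milliseconds" described above.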

Mike Lattimore: In terms of the processing in the silicon, are there mini neural networks that are doing the processing, or what's the actual functionality that's going on there?

Sumit Sharma: That depends on the product. It all depends upon, in some cases, the kinds of things we're doing, how many teraops are needed to do the kinds of things we need to do. We can certainly use some commercial off-the-shelf silicon, but once we get to a certain point and we understand the mathematics, we incorporate that within our silicon. Adding GPUs and DSPs, we are a silicon company at the core, we know how to do that. But it's not just putting machine intelligence for the sake of putting machine intelligence into our devices. It is, what's actually relevant.

This would create a product that's lean, that's launchable, it's got the right price point, it doesn't have a whole lot of overhead, just the capabilities we need.

Perry Mulligan: One of the attributes of our sensing product is the fact that it's got temporal and spatial resolution capability. It's so important for people to realize, thinking of scanning a room. In most cases, the walls and the windows and the furniture are pretty static. So, we can now detect motion and clarify and focus our engine temporally and spatially on that motion.

So, when we talk about efficiencies, when Sumit talks about the data efficiency at the sensor, the Edge compute at the peripheral in our sensor, you get the perspective that we are well positioned to articulate what's happening temporally in that region at that time, and sending that message to the central processor that says, 'heads up, you may want to be aware'.

And let me be illustrative. If I can do that in the display engine, where I'm predicting what your finger is touching in an illuminated display, and obviously there's no capacitive touch on the tabletop, or the surface you're projecting that image on, we're inferring that through time-of-flight, and machine learning where we are predicting your finger landed.

If that's relevant in simulating that interactive display, that capacitive touch display, at 70 miles an hour, do you think it's relevant to detect that the car beside you is going to swerve into your lane? It's going to cross over. The same algorithm, the same thought process or capability of this Edge compute is required to do both. In a cost effective low power way.
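Editor's illustration: the idea of ignoring the static parts of a room and focusing on motion can be sketched as a comparison between two depth frames, with only a compact "heads up" message sent onward. This is a minimal sketch under stated assumptions, not MicroVision's algorithm: the frame format (a grid of depths in metres), the `MotionEvent` type, the `detect_motion` function, and the 5 cm threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

Frame = List[List[float]]  # hypothetical format: depth in metres, rows x columns


@dataclass
class MotionEvent:
    """Compact message describing where the scene changed."""
    row_min: int
    row_max: int
    col_min: int
    col_max: int
    changed_points: int


def detect_motion(prev: Frame, curr: Frame, threshold_m: float = 0.05) -> Optional[MotionEvent]:
    """Return the bounding box of points whose depth changed by more than
    threshold_m between two frames, or None if the scene is static."""
    rows, cols = [], []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            if abs(p - q) > threshold_m:
                rows.append(r)
                cols.append(c)
    if not rows:
        return None
    return MotionEvent(min(rows), max(rows), min(cols), max(cols), len(rows))


# A static room 3 m away, then the same room with something closer in two cells.
static_room = [[3.0] * 8 for _ in range(6)]
with_hand = [row[:] for row in static_room]
with_hand[2][3] = 1.2
with_hand[3][4] = 1.1

event = detect_motion(static_room, with_hand)
print(event)
```

The walls and furniture produce no output at all; only the changed region is reported, so the downstream processor receives a few integers rather than the full frame.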

Sumit Sharma: Depending on the product vertical, the strategy changes. It depends upon what part of the silicon do you want to own, what somebody already has, right? If there's a 14 nanometer technology that can do this better, why would we do it? Because the 14 nanometer technology is in a $400 processor that even Nvidia is not going to be able to produce. So, it's all dependent on power and latency, that's the core of why this has to be more efficient. Nvidia's got a great platform, but the AI is what is gonna enable the entire platform. The same thing in our IoT products, people want a very very fast display, at a lower cost. So they can deploy more.

Mike Lattimore: In terms of understanding gestures, is there some level of accuracy that needs to be there before it's easy to use and widely reliable? What level of accuracy do you need and are we there yet?

Sumit Sharma: Let me put this in context: Think about the 50,000+ skills that are on Alexa right now. If you were to put them in some sort of buckets, you'll find that some of them, voice only is good enough. Some of them, voice and display is good enough. Some of them, voice, display plus touch is good enough. Some of them, voice, display, touch and gesture is required. There's a spectrum, it's not like all of them are required. That's context, that's important.

Over the weekend, I was reading something about Amazon Business. Amazon Business is a bigger opportunity, it's a $10B business they've created in four years, it's outpacing all their other businesses. The point was, this is different interaction points, not what I do with my Alexa at home, these are different kinds of features that will be needed. And gestures are not just hand gestures. Lots of things can be called gestures. Robots can do gestures. Lots of things could be done, right? So we need enough machine learning inside so that we can adapt as their platform adapts.

The point I'm making is that the skills are going to keep growing, and that's just Alexa. You can imagine the actions in Google Assistant, and other platforms will grow. You need something developed that you don't have to, every year, refresh that. And machine learning allows us to adapt to the situation, to the very specific business that our customers may want to attend to.

So, the accuracy is good enough, we can demonstrate that. The case is, what's the application they want to do? And is the platform we're creating scalable?

Perry Mulligan: I think Mike, if you look at the last video we put on our website, it sort of indicates the speed and latency of responsiveness to touch. By the time you come to CES this year, I think you'll see, again, what will approach seamless interaction between the human and the projection system. We believe we have that nut cracked. That's not something that's causing us heartburn.

Mike Lattimore: On that video you posted on your website recently, could you just talk a little bit more about that, it seems like that's something that holds a lot of potential for you. What is the technology you're offering there vs. the smart speaker itself or the cloud service?

Perry Mulligan: Well, at the simplest point, what we're trying to illustrate is, what's the smart speaker market today, I think it's 40, 50 million units deployed. Obviously people have found use and application for a voice activated smart speaker. What we're showing though, is that the ability to do multi-stage transactions, multi-level transactions, are so much more readily and easily accomplished if you have the ability for touch and display combined with voice.

So, one of the tests we try to do is, if you're a busy person, can you order $100 worth of groceries in four minutes? Can you select a multi-dimensional menu item quickly? And it's not a question of whether or not these of themselves are benchmarks or bellwethers, they just illustrate how much simpler it is to accomplish. I've had friends comment that while they own all of the voice activated technologies, they'd be worried about ordering an Uber without being able to visually see on the map where it's going. So the notion of purchasing something without visually seeing that the platform has got the quantity correct, that the resultant value is correct, the MLQ is correct, these things just become a much more natural experience for us, and I think that's why we've come back with saying, it's not about displacing a solution, it's about enabling the user to interact more easily, because we know if it's easier to interact, it becomes easier to transact. And that's how our customers are going to make money.

Sumit Sharma: To add slightly more to that is, displays, capacitive touch displays, Amazon Show was launched, there is product that is out there. But yet, none of them are taking off as much as the smart speaker by themselves. As Perry mentioned, 40-50 million approximately is the install base, and very few of them are displays. There's a fundamental issue with the demographic, how they want to interact with information. The point was that, nobody wants a computer in their homes or their offices with a camera that's monitoring, that's not what they want. They want a technology that's a disappearing display with all the benefits. So we're not displacing anything, nothing exists! There's no 20-inch capacitive touch display monitor product out there, we're not displacing anything. This is a completely brand new vector because the other one has plateaued. People do not want to actually get on it.

Mike Lattimore: That makes sense. Is the implication by putting this video out, and obviously a very attractive use case here, should we assume that one of the main development projects you're working on leads to a product like this next year?

Perry Mulligan: One of the things we've said several earnings calls ago, was that we are focused on AI platform owners and the advantage and disadvantage of that Mike as you know, is that there's only a handful of those in the world. And we're talking to all of them about these types of applications, and using these types of demos, to allow them to help monetize their huge investments.

If we think of AI as I described it earlier, a neural net in the cloud, as being a $19B spend this year for companies, growing to over $50B by 2021, the ability to monetize that investment, that's going to be critical for these large OEMs. And we think that it's sort of self-evident that the demos that we're doing and the product development we're talking of, enables them to monetize that.

Sumit Sharma: I think if you go as far back as CES 2017, we've actually been public about this direction, how the interaction would work out. This is just sort of showing the condensed version of it. Think about it more as, as we have different partnerships, everybody will have their own desire about how they want AI to be deployed out. What we're showing there is, exactly how anybody would monetize that. These are applications that we've talked about. It becomes very easy for somebody to infer from this video, that what kind of transaction and human interaction is needed, and is that something valuable.

And I think that Taco Time, is one of my favorite ones. I've talked about it for a long time, publicly and privately. But it's not fantasy, right? This is actually something that most folks would think that, when you're on the fly, it's a very simple thing. The entire script is slow because we're explaining things, but if you did it privately, it moves fast. And you can go from anywhere from $100 to $200 worth of orders without ever logging on to anything. It is on the fly. You can infer from that kind of application and use cases that will actually enable this market.

Mike Lattimore: And you'll probably just order more if it's easier to do. Right before you presented, we had iRobot on, and they're kind of like almost a mini autonomous car now. In terms of smart home technologies, consumer robots, smart gesture sensing technologies in a home security scenario, how do you think about those opportunities?

Perry Mulligan: Maybe if I could, Mike, we're always challenged to explain this to our families, what the heck do we do? And the last time I was asked to explain this, I said, think of our Grandmother Nan is coming to visit. And we tell the AI platform that Nanny's in the house. And it knows where the couch is, and it knows where the floor is, and it knows what the room looks like. And if Nanny's having a snooze on the couch in the afternoon, it's OK because sometimes she does that. But if Nanny is lying on the floor, maybe it might want to call her name, or call 911.

So, that spatial awareness, that whole context of being able to put environmentally, a situation into perspective, is sort of the difference between taking a picture of the room, and standing at the doorway of the room. Your awareness of what's going on is very different.

Sumit Sharma: To add, in robotics, there's a lot of different applications, certainly you could have Interactive Projection. But primarily what Perry is talking about is our Consumer LIDAR product. For example, iRobot wants mapping services, cloud mapping services, right? You need some sort of sensor with a very low compute threshold, on the Edge, some module, our Consumer LIDAR products are for those kinds of services.

The specific application that Perry talked about, we've really thought through that internally. You could not enable that with a camera module. You could not enable that primarily with Interactive Projection, but our Consumer LIDAR product, which if you take the display away, is just the LIDAR portion of it, it enables that robot to do something. What iRobot is making is a consumer product. We are a module supplier. So we enable them to do more, without having to use an i7 processor to take a lot of information and locally do a lot of processing, so their overall system could be smaller and with a lower cost. So that's the philosophy behind all the different verticals.

Perry Mulligan: We believe that our technology will offer performance that is equal to or better, at a lower compute, at a lower cost. So while I fully understand there's many different competing solutions available, that's what we think we can bring with our Consumer LIDAR solution.

Mike Lattimore: Are there any components in the supply chain that you feel are too high cost for a specific use case? Or do you feel that the components of the supply chain that you need are readily available?

Perry Mulligan: Mike, I'll just sort of remind folks that I only spent 30 years in supply chain and operations. So anything above zero is too expensive, and infinity is the quantity I want [laughter]. Having said that, we've got this wonderful opportunity here that says, we're in the right place at the right time. Our technology leverages standard industry process, standard industry technologies, we're dealing with world class suppliers that have the ability to ramp as we see fit, and you know and I know that when we're talking to these large platform owners, you don't end up talking in six figures worth of volumes, you talk seven figure, eight figures kinds of volumes, because that's how they define success.

One of the advantages, and one of the things I'm absolutely pleased to remind folks of, is Sumit's been with us now for coming up on three years. And in that period of time, he's managed to transform what was the grad shop mentality within operations within our company, to something that passes the scrutiny of world class OEMs, and if we hadn't been able to pass that scrutiny, we wouldn't be at the levels of conversations we're having today.

So, we're primed, and ready to go.

Mike Lattimore: Awesome, that's great to hear! Anything else to touch on real quick here?

Perry Mulligan: I really appreciated you taking the time and giving us the opportunity to speak with you and your audience today, Mike. We're excited about where the company is positioned, we're excited about the future that we're facing. We respect that we came from a long history of display and we really believe that the laser beam scanning technology core that we have will allow us to leverage the LIDAR side of that equation well into the future.

Thanks again for the time, really appreciate it.
